The annual deadline for 2025 AVETMISS reporting is approaching, and your Student Management System (SMS) is churning hard. You have likely finalised the easy data: the on-campus theory classes, the online modules, and the internal assessments. That data is clean because you control it. It happened under your roof, was supervised by your staff, and was recorded on your systems. But you can’t submit it just yet. You are staring at a red error screen because of the one data set you do not fully control: placements.

Every year, work-based learning is the final hurdle in the VET data submission marathon. It is the hardest data to chase, the hardest to verify, and, under NCVER's strict validation rules, the easiest to get wrong.

If your organisation is still relying on paper logbooks, scattered spreadsheets, and hopeful emails to busy host employers to gather this critical evidence, January is not going to get any easier. You are trying to force analog, chaotic information into a digital, ordered system.

This article explains exactly why placement data is the technical Achilles heel of your annual submission, and why trying to fix these errors in January is a broken strategy that creates long-term governance risk.

The Anatomy of a Data Disaster

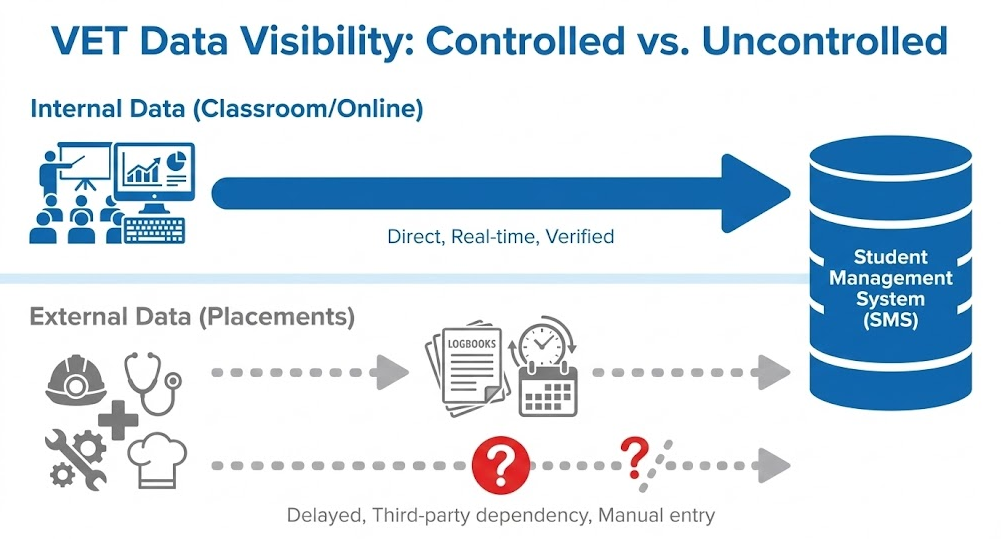

When a student sits in a classroom at your RTO, your trainer marks attendance. Your assessor marks competency. That data chain is short, secure, and goes straight into your SMS. It is internally generated and internally validated in near real-time.

When a student goes on a mandatory work placement, whether it's a construction site, an aged care facility, or a commercial kitchen, the data chain breaks.

You are now relying entirely on external third parties to generate critical compliance data for you. You are asking a busy site foreman, a stressed nurse unit manager, or a head chef to accurately record start times, finish times, and observed tasks on a piece of paper.

Host employers do not care about your 2025 AVETMISS reporting. They prioritise getting the work done and maintaining a safe workplace. Filling out your RTO’s paperwork correctly is their lowest priority.

The Lag Time of Paper Creates Data Rot

In a paper-based system, the physical evidence of the placement might not return to the RTO until weeks or months after the activity occurred. A student finishes a crucial block of hours in March but drops the paper logbook off at reception in June.

By the time your data team tries to enter this information, the trail is cold. This lag creates significant operational risk.

If there is a discrepancy (a missing supervisor signature, an unclear date scrawled in pen, or total hours that contradict the shifts worked), you cannot easily fix it. The student has moved on to another unit. The supervisor doesn't remember what happened three months ago and is reluctant to sign an amended form.

So the data gets entered with best guesses or left incomplete. It sits in your system like a time bomb, waiting for the January NAT file validation process to expose the errors. You aren't managing data in real-time; you are trying to piece together what happened six months ago based on fragments of unreliable evidence.

The Technical Bottleneck: Why NAT00120 Fails Validation

Let us get technical. When you run your final extract for NCVER or your State Training Authority, you aren't sending a vague summary of student activity. You are sending NAT files: highly structured, fixed-width text files that must satisfy rigid field-level validation rules.
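To make "highly structured" concrete, here is a minimal Python sketch of the general shape of a fixed-width record: every field occupies a set number of characters, and dates are written as DDMMYYYY. The field names and widths in `ILLUSTRATIVE_LAYOUT` are hypothetical, not the official NAT00120 layout. The sketch also left-justifies every value with spaces, whereas the NCVER specification defines the exact position, width, and padding of each real field.

```python
from datetime import date

# Hypothetical layout for illustration only: (field name, width in characters).
# These are NOT the official NAT00120 positions or widths.
ILLUSTRATIVE_LAYOUT = [
    ("client_id", 10),
    ("subject_id", 12),
    ("activity_start_date", 8),
    ("activity_end_date", 8),
    ("outcome_id", 2),
]


def avetmiss_date(d: date) -> str:
    """Format a date as DDMMYYYY (8 characters)."""
    return d.strftime("%d%m%Y")


def fixed_width_record(values: dict[str, str], layout=ILLUSTRATIVE_LAYOUT) -> str:
    """Pad each value to its field width; refuse values that would overflow."""
    parts = []
    for name, width in layout:
        value = values[name]
        if len(value) > width:
            raise ValueError(f"{name} is {len(value)} characters; field allows {width}")
        parts.append(value.ljust(width))  # illustrative: left-justify, space-pad
    return "".join(parts)


record = fixed_width_record({
    "client_id": "S1234",
    "subject_id": "UNITCODE01",
    "activity_start_date": avetmiss_date(date(2025, 3, 3)),
    "activity_end_date": avetmiss_date(date(2025, 11, 12)),
    "outcome_id": "20",
})
print(len(record), record[22:30])  # 40 03032025
```

Because every field sits at a fixed offset, a single overlong or mis-keyed value shifts or corrupts everything after it, which is why the sketch rejects overflow outright instead of truncating.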

The placement data usually lives in the NAT00120 (Training Activity) file, called the Enrolment file in earlier AVETMISS releases. Depending on how your Training and Assessment Strategy (TAS) is structured, it can also affect the NAT00130 (Program Completed) file.

NCVER's validation rules do not care about your operational difficulties with host employers. They only care about the logic in the fields. If the logic fails, the file is rejected, and your funding or registration is put at risk.

Here are the three most common technical reasons why placement data derived from manual systems blocks submission in January.

1. The Date Logic Trap

The NAT00120 file requires a valid 'Activity Start Date' and 'Activity End Date' for every unit of competency or module.

In a placement setting, these dates are chaotic. A student might need 120 hours of placement to achieve competency. They might do two days in March, nothing in April, a full week in July, and finish the final remaining hours in November.

How does your paper logbook translate into those two mandatory date fields in your SMS?

If your data entry staff enter the very first and very last dates the student attended, the record looks as though the student was on placement continuously for nine months. This can trigger validation warnings or queries about a duration that seems excessive relative to the unit's nominal hours.
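One quick way to see the problem is to compare the calendar span implied by first-and-last dates with the days the student actually attended. The following is a minimal Python sketch using the March, July, and November pattern above; the `min_density` threshold is a hypothetical internal rule of thumb, not an NCVER validation rule.

```python
from datetime import date, timedelta


def placement_span(shift_dates: list[date]) -> tuple[date, date, int]:
    """Return (first day attended, last day attended, calendar days spanned inclusive)."""
    if not shift_dates:
        raise ValueError("no placement shifts recorded")
    first, last = min(shift_dates), max(shift_dates)
    return first, last, (last - first).days + 1


def is_sparse(shift_dates: list[date], min_density: float = 0.10) -> bool:
    """Flag placements where days attended are a small fraction of the span.

    min_density is a hypothetical threshold, not an NCVER rule.
    """
    _, _, span_days = placement_span(shift_dates)
    days_attended = len(set(shift_dates))
    return days_attended / span_days < min_density


march = [date(2025, 3, 3), date(2025, 3, 4)]
july = [date(2025, 7, 7) + timedelta(days=i) for i in range(5)]  # a full week
november = [date(2025, 11, 10), date(2025, 11, 11), date(2025, 11, 12)]
shifts = march + july + november

first, last, span = placement_span(shifts)
print(first, last, span)  # 2025-03-03 2025-11-12 255
print(is_sparse(shifts))  # True: 10 days attended across a 255-day span
```

A flag like this does not make the dates wrong; it tells your team which records need an explanatory note or a closer look before the file is built.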

Even worse, if your manual records are sloppy, data entry errors creep in: an Activity End Date keyed in before the Activity Start Date, or placement dates that fall outside the program's dates, for example after the Program Completed Date reported in the NAT00130 file.

These date logic errors are endemic to manual placement tracking because paper doesn't force anyone to enter valid timestamps at the point of capture.
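Checks like these are simple enough to run the moment a record is keyed in rather than in January. Below is a minimal sketch, assuming a hypothetical in-house `PlacementActivity` record and that the program completed date is known when the check runs; it is not NCVER's validation software.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class PlacementActivity:
    """Hypothetical in-house record for one placement unit."""
    client_id: str
    subject_id: str
    activity_start: date
    activity_end: date


def date_logic_errors(act: PlacementActivity,
                      program_completed: Optional[date] = None) -> list[str]:
    """Return date-logic problems for one placement record (empty list if none)."""
    errors = []
    if act.activity_end < act.activity_start:
        errors.append("activity end date is before activity start date")
    if program_completed is not None and act.activity_end > program_completed:
        errors.append("activity ends after the program completed date")
    return errors


keyed_backwards = PlacementActivity("S1234", "PLACEMENT01", date(2025, 11, 12), date(2025, 3, 3))
print(date_logic_errors(keyed_backwards))
# ['activity end date is before activity start date']

runs_late = PlacementActivity("S1234", "PLACEMENT01", date(2025, 3, 3), date(2025, 11, 12))
print(date_logic_errors(runs_late, program_completed=date(2025, 10, 31)))
# ['activity ends after the program completed date']
```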

2. The USI Mismatch

Every RTO knows it cannot submit data without a verified Unique Student Identifier (USI), a requirement mandated by the Student Identifiers Act 2014.

This is usually sorted out at enrolment. However, placements can expose cracks in this process. If the student details scrawled on a paper logbook don't exactly match the verified legal details linked to the student's USI in your system, reconciling that record becomes a manual nightmare.
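Much of that reconciliation effort goes into separating trivial formatting differences (case, apostrophes, hyphens) from genuine mismatches. The sketch below assumes a hypothetical `StudentIdentity` record holding the details from the logbook and from your SMS. It only triages records for a human to review; it does not replace USI verification through the Student Identifiers Registrar, and the name you report must still match the verified record exactly.

```python
import unicodedata
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class StudentIdentity:
    """Hypothetical record: details as written on a logbook or held in the SMS."""
    family_name: str
    given_names: str
    date_of_birth: date


def normalise(name: str) -> str:
    """Case-fold, drop accents and apostrophes, treat hyphens as spaces."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    cleaned = no_accents.replace("'", "").replace("\u2019", "").replace("-", " ")
    return " ".join(cleaned.casefold().split())


def identity_mismatches(logbook: StudentIdentity, verified: StudentIdentity) -> list[str]:
    """List fields that still differ after ignoring trivial formatting."""
    problems = []
    if normalise(logbook.family_name) != normalise(verified.family_name):
        problems.append("family name")
    if normalise(logbook.given_names) != normalise(verified.given_names):
        problems.append("given names")
    if logbook.date_of_birth != verified.date_of_birth:
        problems.append("date of birth")
    return problems


verified = StudentIdentity("O'Brien", "Mary-Jane", date(2001, 5, 17))
print(identity_mismatches(StudentIdentity("OBRIEN", "Mary Jane", date(2001, 5, 17)), verified))
# []
print(identity_mismatches(StudentIdentity("O'Brien", "Mary", date(2001, 6, 17)), verified))
# ['given names', 'date of birth']
```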

Furthermore, if you cannot verify the placement actually occurred because the paperwork is missing entirely, you cannot legitimately attach that activity to the student's USI record. You are stuck in limbo, unable to report the activity but knowing it probably happened.

3. The Outcome Code Validation Conflict

A critical AVETMISS friction point occurs at the intersection of placement evidence and unit outcome reporting.

Many RTOs utilise Outcome Code 70 (Continuing activity) as a holding pattern for placement units where field activity has ceased, but the evidentiary record (the paper logbook) has not yet been verified by an assessor.

This latency creates a critical validation failure in January. You cannot submit Code 70s for students who have completed their qualification. The data logic requires these to be converted to outcomes, typically Outcome 20 (Competent).

However, an assessor cannot validly apply Outcome 20 without immediate access to sufficient, authentic evidence, as required by the Rules of Evidence. If the paper logbook is missing, incomplete, or ambiguous, the unit status remains paralysed at Code 70.

This triggers a fatal validation error: the submission is blocked because a NAT00130 record indicating 'Program Completed' is directly contradicted by NAT00120 records reporting 'Continuing activity' for mandatory placement units. The manual evidence chain fails to close the loop in time for the reporting deadline.
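This cross-file conflict can be caught well before January with a simple join between the two data sets. The sketch uses simplified, hypothetical stand-ins for NAT00120 and NAT00130 rows (real records carry many more fields) and lists every Outcome 70 row whose student and program are already reported as completed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActivityRecord:
    """Simplified, hypothetical stand-in for a NAT00120 row."""
    client_id: str
    program_id: str
    subject_id: str
    outcome_id: str  # e.g. "20" competency achieved, "70" continuing activity


@dataclass(frozen=True)
class CompletionRecord:
    """Simplified, hypothetical stand-in for a NAT00130 row."""
    client_id: str
    program_id: str


def continuing_after_completion(activities: list[ActivityRecord],
                                completions: list[CompletionRecord]) -> list[ActivityRecord]:
    """Rows still reported as continuing (70) for a program reported as completed."""
    completed = {(c.client_id, c.program_id) for c in completions}
    return [a for a in activities
            if a.outcome_id == "70" and (a.client_id, a.program_id) in completed]


activities = [
    ActivityRecord("S1", "PROG-A", "THEORY01", "20"),
    ActivityRecord("S1", "PROG-A", "PLACEMENT01", "70"),
    ActivityRecord("S2", "PROG-A", "PLACEMENT01", "70"),  # S2 has not completed: fine
]
completions = [CompletionRecord("S1", "PROG-A")]
for row in continuing_after_completion(activities, completions):
    print(row.client_id, row.subject_id)  # S1 PLACEMENT01
```

Run monthly, a report like this shows which placement units are waiting on evidence while the student and supervisor can still be reached.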

The Governance Risk of Data Fabrication

Facing validation blocks in January creates severe organisational pressure to resolve errors by any means necessary to meet the deadline.

In the absence of verifiable evidence stored in a secure system, this pressure frequently leads to ad-hoc data manipulation. From a regulatory perspective, this moves the RTO from administrative inefficiency into active non-compliance.

Common manifestations of this risk include:

- Falsifying Temporal Data: Entering estimated or "dummy" start/end dates into the SMS because exact dates cannot be retrieved from illegible paper records. This compromises the integrity of VET activity data.

- Breaching Rules of Evidence: Marking units as Competent (Outcome 20) to clear a validation error before the physical evidence has been received and authenticated by the assessor. This is a direct breach of Clause 1.8 of the Standards for RTOs 2015.

- Inaccurate "Nil Returns": Omitting placement activity data entirely for specific cohorts because the manual data collection failed. Submitting a nil return when activity occurred is a demonstrable breach of the Data Provision Requirements condition of registration.

ASQA’s move toward data-driven monitoring means they are increasingly looking for discrepancies between reported data and actual operational evidence. A clean NAT file submission that is unsupported by robust, accessible primary evidence is a significant governance failure waiting to be detected by an audit.

This is Incredibly Risky Territory

ASQA is aggressively moving towards a systemic oversight model in 2025 and 2026. They are looking for consistency between what your data says to NCVER and what is actually happening on the ground.

If you submit clean data to NCVER to pass validation, but a subsequent performance assessment reveals your actual evidence consists of messy, incomplete, or contradictory paper logbooks, you have demonstrated a failure of governance. You have proven that your data submission is a fabrication designed to pass validation, not an accurate reflection of student activity.

Trying to fix data quality issues at the submission stage is not compliance; it is crisis management.

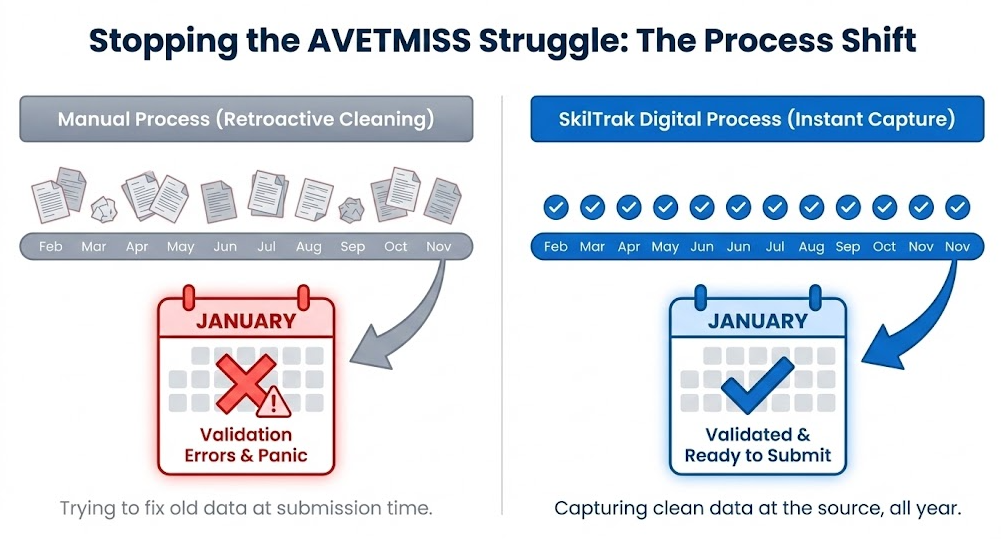

Stop Fixing Data. Start Fixing the Process

The pain you are feeling right now is not a failure of your data team. They have worked hard to get this far. It is a failure of your infrastructure. You are trying to use analog, disconnected tools to manage a highly technical, digital requirement.

The only way to stop the January AVETMISS struggle is to stop relying on paper to capture placement evidence. You need to move from manual data cleaning to instant data capture at the source.

This is where a dedicated placement management platform like SkilTrak changes the dynamic. By using digital logbooks and mobile supervisor sign-offs, you capture the data cleanly in the field.

Instant Timestamps: When a student clocks in and out on their mobile app, every shift is timestamped, so the first clock-in gives you an exact 'Activity Start Date' and the final clock-out an exact 'Activity End Date'. No guessing, no transcription errors, no logic failures.
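As a rough sketch of how clock events could become reportable fields, the function below derives the earliest clock-in date, the latest clock-out date (both as DDMMYYYY), and total hours from a list of shifts. The `Shift` type and the output keys are hypothetical; this is not SkilTrak's API or data model.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Shift:
    """Hypothetical clock-in/clock-out pair captured on a student's phone."""
    clock_in: datetime
    clock_out: datetime


def summarise_shifts(shifts: list[Shift]) -> dict[str, object]:
    """Derive DDMMYYYY start/end dates and total hours from clock events."""
    if not shifts:
        raise ValueError("no shifts recorded")
    for s in shifts:
        if s.clock_out <= s.clock_in:
            raise ValueError(f"clock-out {s.clock_out} is not after clock-in {s.clock_in}")
    start = min(s.clock_in for s in shifts).date()
    end = max(s.clock_out for s in shifts).date()
    seconds = sum((s.clock_out - s.clock_in).total_seconds() for s in shifts)
    return {
        "activity_start_date": start.strftime("%d%m%Y"),
        "activity_end_date": end.strftime("%d%m%Y"),
        "hours": seconds / 3600,
    }


shifts = [
    Shift(datetime(2025, 3, 3, 7, 0), datetime(2025, 3, 3, 15, 30)),
    Shift(datetime(2025, 3, 4, 7, 0), datetime(2025, 3, 4, 15, 0)),
]
print(summarise_shifts(shifts))
# {'activity_start_date': '03032025', 'activity_end_date': '04032025', 'hours': 16.5}
```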

Verified Evidence: Supervisors sign off digitally on the spot. The evidence is locked into your system immediately, not months later. This supports the ongoing, systemic oversight that ASQA now expects.

Ongoing Validation: Because the data flows into your system throughout the year, your Student Management System can validate it progressively. You spot and fix errors in March, not the following January.
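Progressive validation works best when each logbook entry is checked at the moment it is submitted, while the student and supervisor are still on site. A minimal sketch of such a capture-time check follows; the `LogbookEntry` fields and the 12-hour `MAX_SHIFT` limit are hypothetical policy choices, not NCVER or ASQA rules.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical internal policy limit; not an NCVER or ASQA rule.
MAX_SHIFT = timedelta(hours=12)


@dataclass(frozen=True)
class LogbookEntry:
    student_id: str
    clock_in: datetime
    clock_out: datetime
    supervisor_signoff: Optional[str]  # hypothetical: supervisor ID once signed


def capture_issues(entry: LogbookEntry, now: datetime) -> list[str]:
    """Return problems to fix before the entry is accepted."""
    issues = []
    if entry.clock_out <= entry.clock_in:
        issues.append("clock-out is not after clock-in")
    elif entry.clock_out - entry.clock_in > MAX_SHIFT:
        issues.append("shift exceeds policy maximum")
    if entry.clock_out > now:
        issues.append("shift ends in the future")
    if not entry.supervisor_signoff:
        issues.append("missing supervisor sign-off")
    return issues


now = datetime(2025, 3, 4, 18, 0)
ok = LogbookEntry("S1234", datetime(2025, 3, 4, 7, 0), datetime(2025, 3, 4, 15, 0), "SUP-01")
bad = LogbookEntry("S1234", datetime(2025, 3, 4, 15, 0), datetime(2025, 3, 4, 7, 0), None)
print(capture_issues(ok, now))   # []
print(capture_issues(bad, now))  # ['clock-out is not after clock-in', 'missing supervisor sign-off']
```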

The definition of madness in VET management is relying on paper logbooks every year and expecting a different result at reporting time. If you want next January to be different, you need to change how you collect placement data today.